With the introduction of RTX, Nvidia brought real-time Ray Tracing to games, transforming the way light behaves in game environments. We have already compared the two forms of rendering, Ray Tracing and Rasterized Rendering, in detail, and all in all Ray Tracing does seem like the way to go as far as the future of gaming is concerned. Nvidia’s RTX cards include specialized cores dedicated to Ray Tracing, known as RT Cores, which handle the bulk of the Ray Tracing workload in games. What many people might not know, though, is that Nvidia’s Turing and Ampere cards also include another set of specialized cores known as Tensor Cores.

Tensor Cores

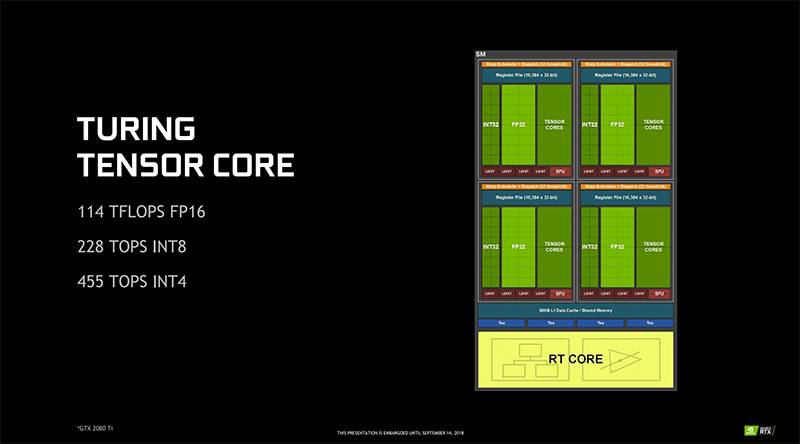

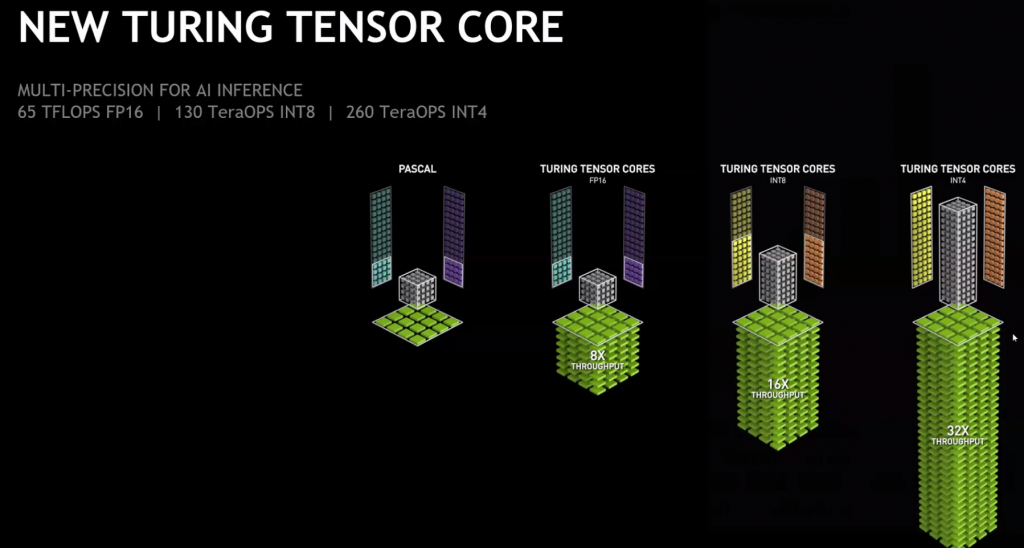

Tensor Cores are physical cores dedicated to the complex computations involved in tasks such as machine learning and AI. They enable mixed-precision computing, dynamically adapting calculations to accelerate throughput while preserving accuracy. These cores are designed specifically to make such workloads more efficient and to relieve the card’s main CUDA cores of the extra burden.

In consumer cards such as the gaming-focused GeForce cards based on the Turing or Ampere architecture, the Tensor Cores do not have a rendering job as such. They do not render frames or raise general performance numbers the way the CUDA cores or the RT Cores do. Their presence does serve a purpose, however: they do the bulk of the processing behind Nvidia’s excellent Deep Learning Super Sampling, or DLSS, feature. We will explore DLSS in a minute, but first we have to identify which cards actually have Tensor Cores.

As of the time of writing, only a handful of cards feature Tensor Cores. Nvidia first introduced them with the Volta architecture, which reached the desktop in the Nvidia TITAN V workstation card. Volta was never scaled down to consumer-level graphics cards, so it never appeared in a GeForce GPU. After that, Nvidia brought Tensor Cores to a number of Quadro GPUs and, more importantly for gamers, to the RTX cards based on the Turing and Ampere architectures. This means that all RTX-branded graphics cards, from the RTX 2060 all the way to the RTX 3090, have Tensor Cores and can take advantage of Nvidia’s DLSS feature.

How Do Tensor Cores Work?

While the actual process behind the working of a Tensor Core is quite complicated, it can be summarized in three points.

- Tensor Cores reduce the cycles needed for multiply and add operations 16-fold: in one example, for a 32×32 matrix, from 128 cycles to 8 cycles.
- Tensor Cores reduce the reliance on repetitive shared memory access, thus saving additional cycles for memory access.
- Tensor Cores are so fast that computation is no longer a bottleneck. The only bottleneck is getting data to the Tensor Cores.
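
The multiply and add work in the first point is a matrix multiply-accumulate, D = A × B + C. The sketch below emulates that operation in plain Python, assuming half-precision (FP16) inputs and single-precision (FP32) accumulation, which is the mixed-precision mode Nvidia describes for its Tensor Cores. The helper names `to_fp16`, `to_fp32`, and `mma_tile` and the 4×4 tile size are assumptions made for this illustration, not part of any Nvidia API, and the code says nothing about how the hardware schedules the work.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x):
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def mma_tile(a, b, c):
    """Return D = A x B + C for square tiles (illustrative helper).

    Elements of A and B are rounded to FP16 before multiplying;
    the running sum is kept in FP32.
    """
    n = len(a)
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = to_fp32(c[i][j])
            for k in range(n):
                prod = to_fp16(a[i][k]) * to_fp16(b[k][j])
                acc = to_fp32(acc + prod)
            d[i][j] = acc
    return d

# Example tiles: A times the identity, plus a tile of ones, gives A + 1.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]
a = [[0.5 * (i + j) for j in range(4)] for i in range(4)]

d = mma_tile(a, identity, ones)
```

A Tensor Core carries out a whole tile of this arithmetic in hardware in one go, whereas the loops above spell out every multiply and add separately. That difference is where the cycle savings in the first point come from.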

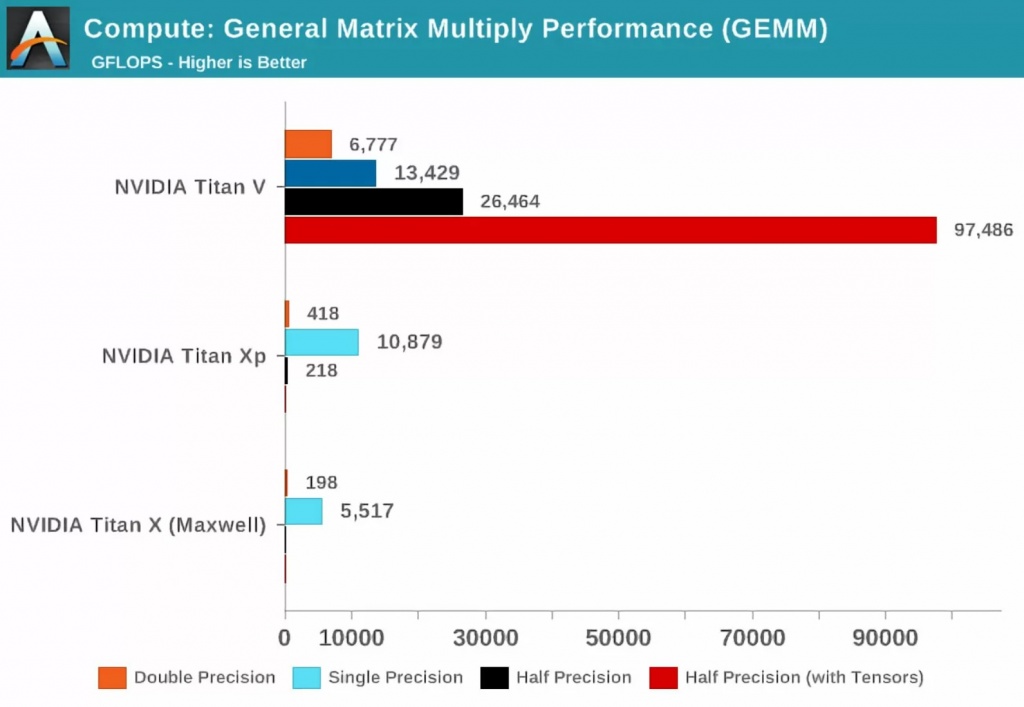

In simple words, Tensor Cores are used to perform extremely complex calculations that would take non-specialized cores such as CUDA cores an unreasonable amount of time. Because they are built for this one job, Tensor Cores are excellent at it. In fact, when Volta first appeared, Anandtech carried out some math tests using three Nvidia cards. The new Volta card, a top-end Pascal graphics card, and an older Maxwell TITAN card were all thrown into the mix, with the results shown in the chart. In this chart, the term precision refers to the number of bits used for the floating-point numbers in the matrices, with double being 64, single being 32, and so on. The result clearly shows that Tensor Cores are far ahead of standard CUDA cores when it comes to specialized tensor computations such as this one.
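
To make the precision terms concrete, here is a small sketch using Python’s standard `struct` module, which can store a number as a 16-bit half, 32-bit single, or 64-bit double. The helper names `round_to` and `storage_bits` are made up for this example. It stores the same value, one third, at each precision and reports how far the stored number drifts from the original.

```python
import struct

# struct format codes for IEEE 754 half, single, and double precision.
FORMATS = {"half": "<e", "single": "<f", "double": "<d"}

def round_to(precision, x):
    """Store x at the given precision and read it back."""
    fmt = FORMATS[precision]
    return struct.unpack(fmt, struct.pack(fmt, x))[0]

def storage_bits(precision):
    """Number of bits used to store one value at this precision."""
    return 8 * struct.calcsize(FORMATS[precision])

value = 1.0 / 3.0
for name in FORMATS:
    stored = round_to(name, value)
    error = abs(stored - value)
    print(f"{name:>6}: {storage_bits(name):2d} bits, error {error:.3e}")
```

Fewer bits mean a larger rounding error but also less data to move and multiply, which is the trade-off mixed-precision hardware exploits.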

Applications

But what exactly are the applications of these Tensor Cores? Since Tensor Cores can speed up complex processes such as AI training by as much as 10 times, there are several areas in AI and Deep Learning where they can be useful. Here are some common ones.

Deep Learning

One area where Tensor Cores and the cards that have them can be especially beneficial is the field of Deep Learning. Deep Learning is a subfield of machine learning concerned with artificial neural networks, algorithms inspired by the structure and function of the brain. It is a vast field that covers a whole host of interesting subject areas. The core idea is that we now have fast enough computers and enough data to actually train large neural networks.

This is where the Tensor Cores come in. While normal graphics cards might suffice for a small-scale operation or for an individual, training requires a lot of specialized computational horsepower when it is done at a larger scale. If an organization such as Nvidia itself wants to work on Deep Learning, then graphics cards with the specific computational power of Tensor Cores become a necessity. Tensor Cores handle these workloads much more quickly and efficiently than general-purpose cores such as CUDA cores. This specificity makes these cores, and the cards that contain them, a valuable asset for the Deep Learning industry.

Artificial Intelligence

We have all seen the movies. Artificial Intelligence is supposed to be the next big thing in the field of computing and robotics. Artificial Intelligence, or AI, refers to the simulation of human intelligence in machines that are programmed to think like humans and to perform similar actions. Traits such as learning and problem-solving also fall under the category of artificial intelligence.

It should be noted that artificial intelligence is not limited to the intelligent machines we have seen in the movies. This type of intelligence is actually very common in applications nowadays. The virtual assistants in our mobile phones use a form of artificial intelligence. In the world of gaming, all the computer-generated and computer-controlled enemies and NPCs also exhibit a certain level of artificial intelligence. Anything that has human-like tendencies or behavioral nuances within a simulated environment is making use of artificial intelligence.

The field of artificial intelligence also requires a great deal of specialized computation, and it is another area where graphics cards powered by Tensor Cores come in handy. Nvidia is one of the world’s leaders when it comes to AI and Deep Learning, and products like the Tensor Cores and features like its famed Deep Learning Super Sampling are a testament to that position.

Deep Learning Super Sampling

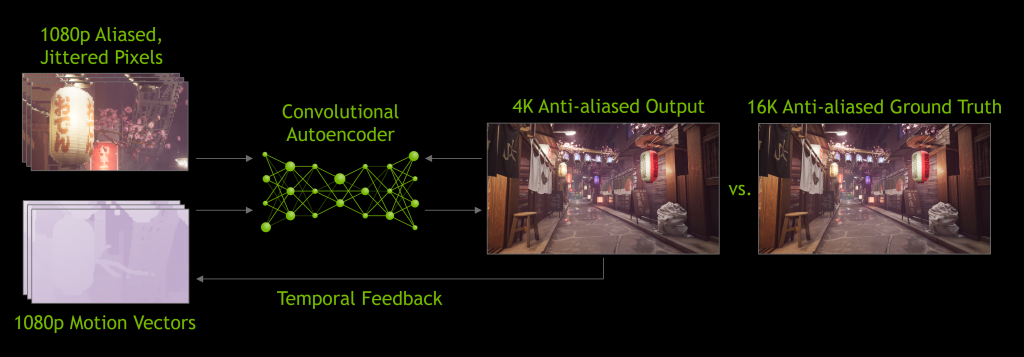

DLSS is one of the best applications of Tensor Cores currently found in the industry. DLSS, or Deep Learning Super Sampling, is Nvidia’s technique for smart upscaling: it takes an image rendered at a lower resolution and upscales it to a higher-resolution display, delivering better performance than native rendering. Nvidia introduced this technique with the first generation of the RTX series of graphics cards. DLSS is not just ordinary upscaling or supersampling. Rather, it uses AI to raise the quality of the image that was rendered at a lower resolution so that image quality is preserved. This can, in theory, provide the best of both worlds, as the displayed image is still high quality while performance improves over native rendering.

DLSS uses the power of the RTX cards to perform complex computations and then uses that data to adjust the final image so that it looks as close to native rendering as possible. The hallmark feature of DLSS is its extremely impressive conservation of quality. With traditional upscaling through the game menus, players can definitely notice a loss of sharpness and crispness once the game is rendered at a lower resolution. This is a non-issue with DLSS. Although it does render the image at a lower resolution (often as much as 66% of the original resolution), the resulting upscaled image is far better than what you would get from traditional upscaling. It is so impressive that most players cannot tell the difference between an image natively rendered at the higher resolution and an image upscaled by DLSS.

The most notable advantage of DLSS, and arguably the entire incentive behind its development, is the significant uplift in performance while DLSS is turned on.
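
A quick back-of-the-envelope calculation shows why rendering at a lower resolution saves so much work. The sketch below assumes that the 66% figure is a rounded two-thirds scale applied to each axis and that the display is 3840×2160; both are assumptions made for this example, and the function names are illustrative.

```python
def render_resolution(out_width, out_height, axis_scale):
    """Internal render size for a given output size and per-axis scale."""
    return round(out_width * axis_scale), round(out_height * axis_scale)

def pixel_fraction(out_width, out_height, axis_scale):
    """Share of the output pixels that the game actually has to render."""
    width, height = render_resolution(out_width, out_height, axis_scale)
    return (width * height) / (out_width * out_height)

# Assumed example: a 4K display with a two-thirds scale on each axis.
width, height = render_resolution(3840, 2160, 2 / 3)
fraction = pixel_fraction(3840, 2160, 2 / 3)
print(width, height, f"{fraction:.0%}")  # prints: 2560 1440 44%
```

Under these assumptions the game shades fewer than half the pixels of a native 4K frame, and the upscaler fills in the rest.
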
The performance uplift comes from the simple fact that DLSS renders the game at a lower resolution and then upscales it using AI to match the monitor’s output resolution. Using the deep learning features of the RTX series of graphics cards, DLSS can output an image whose quality matches the natively rendered one. Nvidia has explained the mechanics behind its DLSS 2.0 technology on its official website. We know that Nvidia is using a system called the Neural Graphics Framework, or NGX, which uses an NGX-powered supercomputer to learn and get better at AI computations. DLSS 2.0 has two primary inputs into the AI network:

- Low-resolution, aliased images rendered by the game engine
- Low-resolution motion vectors from the same images, also generated by the game engine

Nvidia then uses a process known as temporal feedback to “estimate” what the frame will look like. A special type of AI autoencoder takes the low-resolution current frame and the high-resolution previous frame and determines, on a pixel-by-pixel basis, how to generate a higher-quality current frame. At the same time, Nvidia is taking steps to improve the supercomputer’s understanding of the process.
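
The idea of temporal feedback can be illustrated with a toy example on a single row of pixels. To be clear, this is not DLSS: the fixed 50/50 blend below stands in for the trained network, which decides pixel by pixel how much to trust each source. The function names, the motion-vector convention, and the sample data are all assumptions made for this sketch.

```python
def upsample_nearest(row, scale):
    """Repeat each low-resolution pixel `scale` times."""
    return [value for value in row for _ in range(scale)]

def reproject(prev_row, motion, scale):
    """Fetch each output pixel from where it was in the previous frame.

    motion[i] is how far low-res pixel i has moved since the previous
    frame, measured in output pixels (convention assumed for this sketch).
    """
    last = len(prev_row) - 1
    out = []
    for x in range(len(prev_row)):
        source = x - motion[x // scale]
        out.append(prev_row[min(max(source, 0), last)])
    return out

def temporal_upscale(low_row, motion, prev_row, scale=2, alpha=0.5):
    """Blend the upsampled current frame with the reprojected history."""
    current = upsample_nearest(low_row, scale)
    history = reproject(prev_row, motion, scale)
    return [alpha * c + (1.0 - alpha) * h for c, h in zip(current, history)]

# Previous high-resolution row: an edge with fine detail (0.5 then 1.0).
prev_high = [0.0, 0.0, 0.5, 1.0, 0.0, 0.0, 0.0, 0.0]
# Current low-resolution row: the same edge, blurred to 0.75, moved right.
low_now = [0.0, 0.0, 0.75, 0.0]
motion = [2, 2, 2, 2]

result = temporal_upscale(low_now, motion, prev_high)
print(result)  # [0.0, 0.0, 0.0, 0.0, 0.625, 0.875, 0.0, 0.0]
```

The low-resolution frame alone would show a flat 0.75 across both output pixels. Because the motion vectors say where those pixels were in the previous frame, some of the finer detail from the high-resolution history carries over into the new frame.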

Future Applications

As we can see from applications such as deep learning, artificial intelligence, and especially the DLSS feature that Nvidia has introduced, the Tensor Cores in these graphics cards perform a lot of interesting and important tasks. It is difficult to predict what the future holds for these fields, but one can make an educated prediction based on current data and industry trends.

Currently, the global push in fields like artificial intelligence and machine learning is at an all-time high. It is safe to assume that Nvidia will expand its lineup of graphics cards that include Tensor Cores in the near future, and those cards will come in handy for these applications.

DLSS is another great application of the deep learning technologies that utilize the Tensor Cores. Nvidia describes how the trained network reaches players: “Once the network is trained, NGX delivers the AI model to your GeForce RTX PC or laptop via Game Ready Drivers and OTA updates. With Turing’s Tensor Cores delivering up to 110 teraflops of dedicated AI horsepower, the DLSS network can be run in real-time simultaneously with an intensive 3D game. This simply wasn’t possible before Turing and Tensor Cores.” DLSS will probably also see big improvements in the near future. It is one of the most interesting and most productive features to hit the PC gaming industry in recent years, so one has to assume that it is here to stay.

With the power of the Tensor Cores, advances in machine learning and artificial intelligence are being made at a rapid pace. This will most likely continue and accelerate, with companies like Nvidia leading the PC gaming industry in applying the knowledge of these fields to the games that we play.
