The RTX 3080 has a 10GB memory buffer, while the RTX 3090 has a massive 24GB. The relatively unimpressive memory size of the RTX 3080 caused quite a bit of concern among the Nvidia faithful, especially considering that the older RTX 2080 Ti flagship had 11GB of VRAM. Soon after, AMD released its brand new RX 6000 series graphics cards, all of which had 16GB of VRAM at launch, although they used the slower GDDR6 memory modules. In its promotional material, AMD also pointed towards the possibility of modern games using more than 10GB of VRAM at resolutions such as 4K. Shortly after, Nvidia released the RTX 3060, a mid-range graphics card that oddly had 12GB of VRAM. This only muddied the waters further.

Memory Size Debate

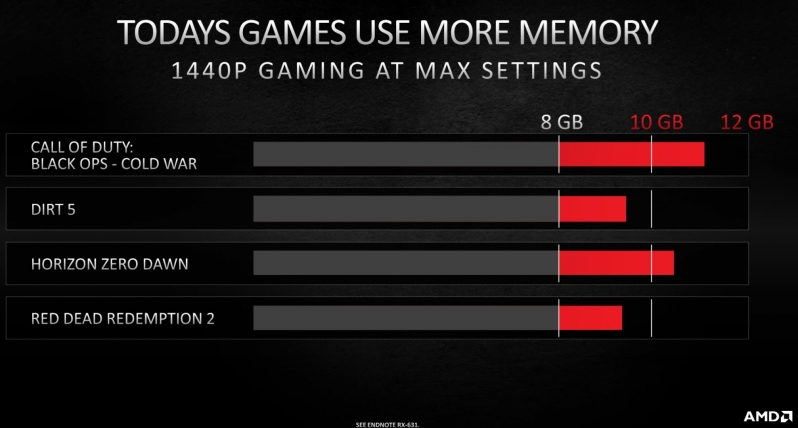

Because of the modest size of the RTX 3080’s VRAM buffer, many enthusiasts pointed out that modern games might require more than 10GB of VRAM in certain scenarios. Especially at higher resolutions like 4K, many games tend to cross the 8GB and 10GB marks when they are loaded with high-quality assets. The debate intensified when media outlets provided examples of games like Doom Eternal and Resident Evil that appeared to consume more than 10GB of VRAM at 4K. The Nvidia faithful, on the other hand, replied with the observation that many of these games allocate more VRAM than they need, and thus are not actually using more than 10GB of VRAM at a time.

VRAM allocation is a slightly complicated concept that works differently in each game, but basically, it means that the game claims the VRAM that is available and fills it with assets that might be needed later. This is a strong argument, as games such as Call of Duty Modern Warfare will allocate more than 20GB of VRAM if your graphics card has it.

AMD also jumped into the mix when it announced the latest entry in the RX 6000 series, the Radeon RX 6700 XT. AMD showed the following slide at its presentation, which claims that several games “use” more than 8GB of VRAM in certain conditions. The word “use” is intentionally left vague. In order to understand the difference between memory usage and memory allocation, we first have to understand what VRAM is and what it actually does.

What Does VRAM Do?

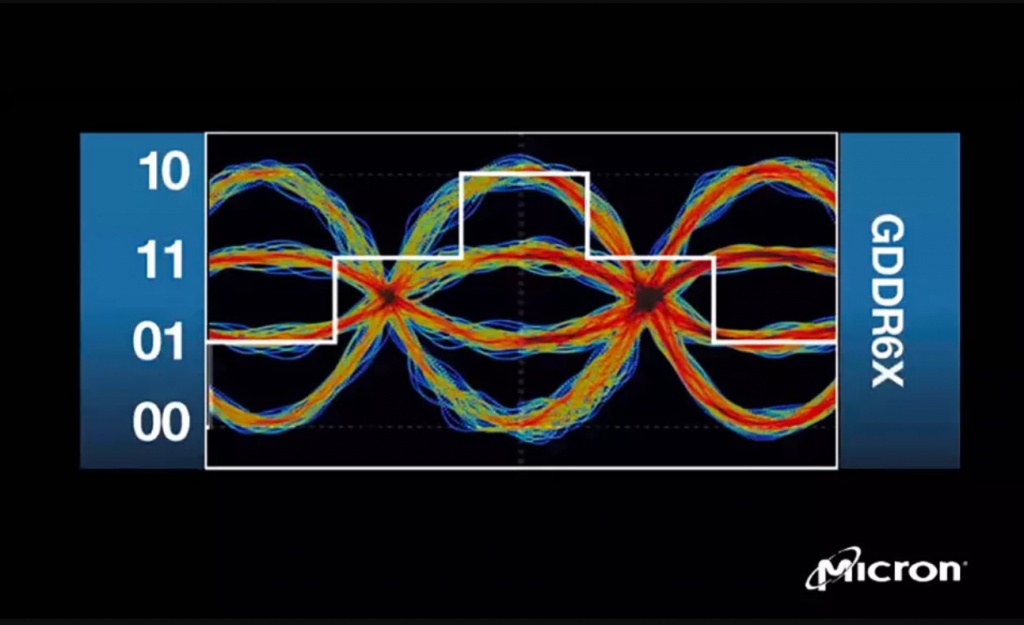

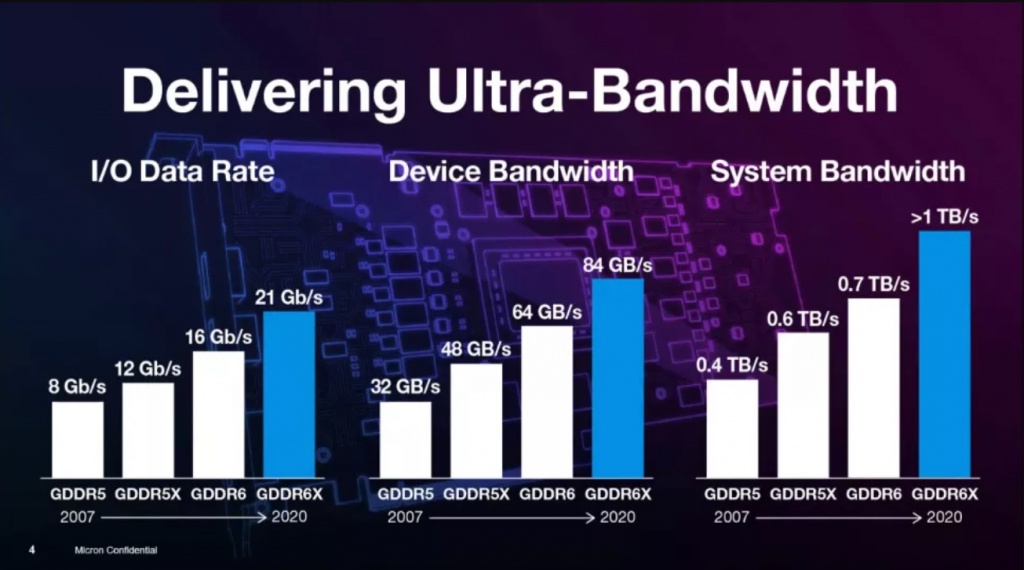

Most of the “heavy lifting” in graphical processing is done by the core of the graphics card, which is known as the GPU. The GPU is a very powerful piece of silicon that is designed and optimized to process graphical workloads such as games. It handles most of the processing that is required to produce the frames that your monitor displays. But in order to process large amounts of data and prepare the frames quickly enough, the GPU needs its working data close at hand. This is where VRAM comes in. VRAM, or Video Memory, is a very high-speed memory located on the graphics card itself so that the GPU has direct access to it. The VRAM stores the assets and textures required by the game so that the GPU can work on them when needed and prepare the frames that need to be displayed. If the VRAM cannot deliver these assets and other crucial data to the GPU fast enough, the user can experience slowdowns, stutters, or even crashes.

Generally, higher resolutions like 1440p and 4K with high graphical settings require more VRAM to store the higher-quality assets, which means that you need a higher VRAM capacity if you want to play at these settings and resolutions. At the same time, you need faster memory in order to move the data from the VRAM to the GPU quickly enough. This is where memory technologies like GDDR6X prove helpful.
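To put the speed side of this in numbers, here is a rough sketch of how peak memory bandwidth is usually estimated: the width of the memory bus in bytes multiplied by the per-pin data rate. The bus widths and data rates below are the publicly listed specifications for each card and are not taken from this article, and the function name is made up for illustration. Real-world throughput will be lower than this theoretical peak.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# Assumed inputs: published bus width (bits) and per-pin data rate (Gbps) for each card.
cards = {
    "RTX 3080 (GDDR6X)": (320, 19.0),
    "RTX 3090 (GDDR6X)": (384, 19.5),
    "RX 6800 XT (GDDR6)": (256, 16.0),
}

for name, (bus_width, data_rate) in cards.items():
    print(f"{name}: {memory_bandwidth_gb_s(bus_width, data_rate):.0f} GB/s")
```

Note that this simple estimate ignores on-die caches, which some cards use to offset a narrower memory bus.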

VRAM Allocation

VRAM allocation is a somewhat complicated and vague concept, since its actual implementation varies from game to game and between different developers. Basically, when a game “allocates” VRAM, it claims most or all of the card’s memory buffer and stores assets and textures in it that it might need later. A good example of VRAM allocation can be found in modern Call of Duty games such as Call of Duty Modern Warfare. Especially in multiplayer, the game claims all the VRAM that the card has to offer and fills it to the brim with assets and textures that might be required at some point in the match. This can include textures for objects in the map, various graphical elements, wireframe maps, etc.

Claiming the entire capacity of the VRAM and filling it with assets that are not strictly necessary might seem wasteful, but it does serve a purpose. When assets are pre-loaded into memory like this, the game does not have to wait for the much slower hard drive or SSD to load an asset when it is required on the screen. The asset can be fetched from the graphics card’s VRAM, which is much faster than any form of storage available today. This allows the game to bring in textures and assets almost instantly, which helps avoid lag and texture pop-in artifacts. The overall gaming experience is, therefore, improved when this technique is used.

One should keep in mind that the game does not actually need to store all these assets in video memory to function properly. If you run Call of Duty Modern Warfare on a graphics card with 6GB of VRAM, it will function perfectly fine while allocating the whole 6GB buffer. Similarly, the game will allocate as much as 20GB of VRAM if you play it on a graphics card with more than 20GB of VRAM. The game is not actually using all that VRAM to render the scene. Instead, it is storing potentially useful assets and textures in the VRAM that might come in handy later on.
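The behaviour described above can be sketched as a simple greedy preloader. This is only an illustration under assumed numbers, not how any particular game or engine actually manages memory: the `Asset` class, the `plan_vram` function, and all asset sizes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    size_mb: int
    required: bool  # True if the current scene cannot render without it


def plan_vram(assets, capacity_mb):
    """Return (resident assets, MB actually needed, MB allocated) for one card."""
    required = [a for a in assets if a.required]
    optional = [a for a in assets if not a.required]
    needed = sum(a.size_mb for a in required)
    if needed > capacity_mb:
        raise MemoryError("required assets do not fit in VRAM")
    resident, allocated = list(required), needed
    for asset in optional:  # opportunistically preload whatever still fits
        if allocated + asset.size_mb <= capacity_mb:
            resident.append(asset)
            allocated += asset.size_mb
    return resident, needed, allocated


# Hypothetical workload: 4000 MB needed right now, 16 optional 1000 MB asset packs.
assets = [Asset("current_scene", 4000, True)]
assets += [Asset(f"map_pack_{i}", 1000, False) for i in range(16)]

for capacity_mb in (6144, 24576):  # a 6GB card and a 24GB card
    _, needed, allocated = plan_vram(assets, capacity_mb)
    print(f"{capacity_mb} MB card: needs {needed} MB, allocates {allocated} MB")
```

In this toy model the amount the game needs stays the same on both cards, while the amount it allocates grows with the capacity of the card.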

Actual VRAM Usage

While some modern games like to allocate the whole VRAM capacity in order to optimize the gaming experience, older games and a large portion of newer games do not adopt this technique. VRAM allocation is a fairly new technique that has become popular in recent years due to the rise of graphics cards with more than 8GB of VRAM. Games that do not use it take only as much VRAM as they actually require to render a scene, and the rest remains unused.

VRAM usage can be defined as the amount of VRAM that is actively involved in rendering the scene being displayed on the screen, plus any subsequent scenes for which the game needs immediate access to assets and textures. VRAM usage is the actual measure of the amount of VRAM a game needs to function properly. If a game “uses” 8GB of VRAM under certain conditions, having less than 8GB of VRAM is going to cause severe hitches and stutters, or maybe even crashes, when those conditions are met.

It should be noted that monitoring software like MSI Afterburner cannot differentiate VRAM allocation from VRAM usage, and therefore only shows VRAM allocation. This means that the VRAM readout in such software can be wildly different between computers. If a game such as DOOM Eternal is allocating nearly the full 11GB of VRAM on a PC with an RTX 2080 Ti, that figure will not translate directly to another PC that has a graphics card with only 8GB of VRAM. In other words, VRAM allocation varies between PCs with different graphics cards and cannot be easily replicated across systems. VRAM usage, on the other hand, can almost always be replicated to a fairly accurate degree across different systems.
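As an illustration of what such a readout looks like outside of an overlay, the sketch below reads the memory figure reported by Nvidia’s `nvidia-smi` command-line tool, which is not mentioned in the original article and is used here only as an assumed example on systems with an Nvidia driver installed. Like the overlays discussed above, the `memory.used` figure reflects memory that has been allocated on the card, not the amount a game strictly needs. The helper names are made up for this example.

```python
import shutil
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_report(text):
    """Parse lines like '9874, 10240' into (allocated_mib, total_mib), one tuple per GPU."""
    rows = []
    for line in text.strip().splitlines():
        used, total = (int(field) for field in line.split(","))
        rows.append((used, total))
    return rows


def read_gpu_memory():
    """Query nvidia-smi if it is installed and working; otherwise return an empty list."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_memory_report(result.stdout)


for allocated, total in read_gpu_memory():
    print(f"{allocated} MiB allocated of {total} MiB ({allocated / total:.0%})")
```

Running this on two PCs with different cards while the same game is open would be one way to see the allocation figure change from system to system.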

How Much VRAM Do You Need?

This is an age-old question that has been repeated with every new graphics card release and is probably going to be repeated in the future as well. Nevertheless, the answer remains the same: it depends. VRAM usage varies wildly across games that are built on different engines using different development techniques. One cannot simply assign a VRAM value to a game or a set of games and be done with it. There are a lot of factors that affect VRAM usage in real life.

The biggest factor that affects VRAM usage is your resolution. The three major resolutions of today are 1080p, 1440p, and 4K. VRAM usage rises sharply when we increase resolution from 1440p to 4K, which has 2.25 times as many pixels, while the jump from 1080p to 1440p is also quite significant. 4K is the resolution that demands the most VRAM as of today, but talk of 8K gaming is also looming on the horizon.

The other factor that affects VRAM usage significantly is the type of games that you play. If you are more interested in real-time strategy games, then your VRAM usage is going to be significantly lower than that of a player who is more interested in open-world games. Similarly, a slightly older AAA title like Assassin’s Creed IV: Black Flag is going to consume a lot less VRAM than the latest entry in the series, Assassin’s Creed Valhalla. There is no rule of thumb for VRAM usage, but if we look at the broad spectrum of games across multiple genres, open-world AAA games are the ones that consume the most VRAM. Remember, we are talking about VRAM usage here and not VRAM allocation.

The in-game settings also affect VRAM usage quite heavily. Most games nowadays offer a whole host of graphical settings that you can change in order to optimize your gaming experience, and some of them have a significant impact on VRAM usage. Texture Quality is the major setting that you need to look out for when talking about VRAM. Higher-quality textures look significantly better than lower-quality ones but often consume a lot more VRAM as well, which can be a problem for gamers who are short on video memory. Shadow quality is another setting that can have a noticeable impact on VRAM usage, and anti-aliasing techniques such as MSAA (Multi-Sample Anti-Aliasing) can also significantly increase your VRAM usage.
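The effect of resolution and texture quality can be made concrete with some back-of-the-envelope arithmetic. The sketch below assumes 4 bytes per pixel or texel (uncompressed 8-bit RGBA) and the usual rule that a full mipmap chain adds roughly one third on top of the base texture. Real games use compressed texture formats and many more buffers than a single render target, so treat these figures as illustrative only; the function names are made up for this example.

```python
RESOLUTIONS = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}


def pixels(name):
    """Total pixel count for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def render_target_mib(name, bytes_per_pixel=4):
    """Size of one uncompressed render target at the given resolution, in MiB."""
    return pixels(name) * bytes_per_pixel / 2**20


def texture_mib(side, bytes_per_texel=4, mipmaps=True):
    """Size of a square uncompressed texture in MiB, optionally with a mipmap chain."""
    base = side * side * bytes_per_texel / 2**20
    return base * 4 / 3 if mipmaps else base


for name in RESOLUTIONS:
    ratio = pixels(name) / pixels("1080p")
    print(f"{name}: {pixels(name):,} pixels ({ratio:.2f}x 1080p), "
          f"{render_target_mib(name):.1f} MiB per render target")

for side in (2048, 4096):
    print(f"{side}x{side} texture: about {texture_mib(side):.0f} MiB with mipmaps")
```

Doubling the side length of a texture quadruples its size, which is a large part of why the texture quality setting has such a visible effect on VRAM usage.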

Is 10GB VRAM Enough?

It is tough to put an exact number on how much VRAM is actually required today. The VRAM requirements of one player can differ vastly from those of another. However, a few general observations can be made, keeping in view the VRAM usage numbers of various games at different resolutions. If you are a gamer who likes to play the latest and greatest AAA games at 4K with all the settings maxed out, then 10GB might be just barely enough for your needs, for now. However, if you are willing to turn down a few settings, or drop the resolution to 1440p and play at a higher frame rate, then 10GB of VRAM should last you quite a while still. For competitive gamers, and gamers who like to play lighter or older titles, 8GB of VRAM should be plenty even at 4K resolution.

Final Words

VRAM allocation is a tricky concept that is becoming more and more popular with the rise of graphics cards with more than 8GB of VRAM. Allocation is quite different from actual VRAM usage, as a game that allocates may claim all of the VRAM available to it in order to optimize the gaming experience. VRAM usage, on the other hand, is the actual amount of VRAM that the game requires to render a particular scene. The two parameters are quite different, and this has caused a lot of confusion among gamers simply because they are grouped under the same umbrella of “VRAM”. With more and more games adopting VRAM allocation, the industry needs to work out a way to differentiate the two in order to remove the ambiguity and misinformation that are common right now.
